summary: Div2-Easy: Alice’s Birthday For a given , we have to partition the first fibonacci numbers in two set...... #### Div2-Easy: Alice’s Birthday

For a given , we have to partition the first fibonacci numbers in two sets with equal sum. The fact that means that if is a multiple of 3, then I can give to Charlie and to Eric, to Charlie and to Eric, so on and so forth eventually giving to Charlie and to Eric. The other two cases are and .

For the first case, let us try to re-use our previous strategy for the last boxes starting with going to Charlie and to Eric. In the end, we will be left with the first two boxes containing and , respectively. Since, so we can simply give one box to Charlie and other one to Eric.

For the second case, we can try our previous strategy but will always be left with one extra box. The fact that the sum of is odd means that we cannot divide them evenly among Charlie and Eric. Had it been possible to divide it equally, it would mean that the total sum is even, which is not possible and can be proven using induction.

Since we only have to run a loop till , so time complexity is .

Reference sol in Java

1 | import java.io.*; |

Reference sol in Python

1 | class AlicesBirthday: |

Div2-Medium: Bob the Builder

Suppose we are at the given height . At any step, we can either move to one of its factors or add to it. While we can move to its factors without any cost, we have to spend $ to add . So, basically, we have a given state, few possible options from there, and we want to find out end whether a specific end state (i.e. height ) is reachable or not. If reachable, we have to do so in minimum cost. This naturally appeals to be modeled by graphs with states representing the nodes and edges representing the transitions. Thus, we have to find the shortest path in a graph, so Dijkstra definitely comes as the first solution in mind.

One may use Dijkstra to solve the problem, but it is not immediately clear when to stop exploring if there does not exist any path. We would never actually need to go beyond (proof is shown below). But going so high, Dijkstra may get TLE. The fact that edge weights are only and means that we can use instead of Dijkstra and the time limit was high enough to allow that in case exploration goes so high. However, in practice, the max step explored before reaching a goal seems to be quite low (the worst case we could find was not more than ); hence normal Dijkstra passes easily.

Reference sol in Java using 0-1 BFS

1 | import java.util.*; |

Reference sol in C++ using Dijkstra

1 | #include <bits/stdc++.h> |

Proof

If we are at some state , then at anytime, we can go to one of its

factors (possibly itself) or add . We do this starting from until we

reach . Suppose it is possible to reach , then thinking about the

process in reverse is basically from we can go to one of its

multiple (possibly itself) or subtract and continue this loop

until we reach . Thus, we can model this in the following form:

Simplifying this, we reduce it to a well-known form:

So, we end up with a linear diophantine equation. Let

. This is only solvable when

so we can instantly return -1 if

is not a multiple of

. The fact that

have opposite signs means that this admits infinite positive solutions. From

Bezuot’s Identity

, we can always find a pair

such that

and

. So we can always find

lie in the range

and since infinite positive solutions are possible and we can shift

, so it’s always possible to find a pair

with both lying in

. Since

, so the max state we need to explore to ensure we always find a solution is bounded by

. Once we reach

from

, we can repeat the same process to reach

from

. While we did the derivation in the reverse form, it obviously holds in the forward manner starting from

. Some useful links:

Div2-Hard / Div1-Easy: The Social Network

We are given an undirected connected graph with exponential weights of the form , and we have to find its minimum cut. The problem may look intimidating but what is in favor for us is the fact that all edge weights are distinct.

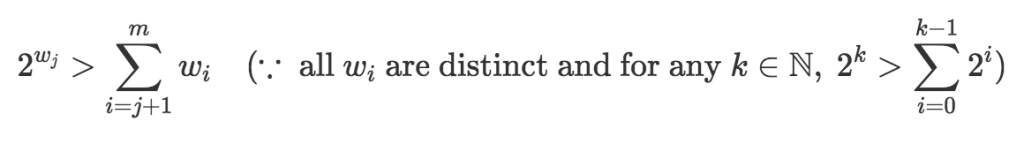

Let the given weights sorted in decreasing order be (i.e. edge weight is ). Then, for any valid between and , we have

So, we would like to keep the edge with the highest weight out of the cut edges set at the cost of ending up with all other edges in the cut since their total sum is strictly less than the highest weight. Thus, we can keep adding edges one by one in the graph (in decreasing order of their weight) as long as one whole connected component does not form.

The edges which do not get added in the graph form our cut edges, and their sum is the answer. This can be done by DSU but was not required since the constraints were significantly small. If we do use DSU, then sorting dominates the runtime with total complexity as .

Reference Sol in Java

1 | import java.util.*; |

Reference Sol in Python

1 | def pow2(n): |

Side Thought: We were wondering whether it is possible to solve this problem using max-flow?

Div1-Medium: Proposal Optimization

Such kind of ratio problems can be solved by using a niche trick. I think it is hard to develop this line of thinking unless you have encountered it before.

Let us say the optimal ratio is . Thus, . What we can do is a binary search on , and check if a ratio is feasible or not. So, for a given , we have to check whether is possible, which is the same thing as (let’s call the L.H.S. ). The constraints on seem to be quite high to do any form of DP while constraints on the grid’s dimension, are quite low although obviously not permitting brute force solutions. However, meet-in-the-middle looks promising since we can store while doing brute force from one corner of the grid and checking from another (meeting in cells lying on some sort of diagonal of the grid).

In fact, it is the intended solution, and we also have to take care of the costs along with to actually know if any of the options is actually feasible. So, when doing brute from one side, we store pairs on those diagonal cells and later sort them according to to ease our process in the next brute. While doing brute from the other end, we have , and we want the highest whose cost satisfies . By storing in prefix maximum manner, we can binary search on the highest index option with cost and take its (since we already sorted) to get our answer.

The best diagonal cells for a grid of size would be the set . Since x, so the number of such diagonal cells (let us denote that by ) can’t exceed . We can focus on the analysis of square matrix since it will dominate the rectangular grids. Let the number of binary search iterations required to find the optimal ratio be (80 iterations suffice for error). To reach a diagonal cell there are ways and then we have to binary search on those many paths which we got from first brute force. Thus, doing meet-in-the-middle takes

Hence, the total time required is bounded by

.

Reference Sol in C++

1 | #include <bits/stdc++.h> |

Reference Sol in Java

1 | import java.util.*; |

Div1-Hard: Tale Of Two Squares:

#div1 A number can be expressed as a sum of two sqaures if and only if all its prime factors of the form occur even times. So while taking the product of few numbers, we are only interested in its prime decomposition; hence, we can store a bit-vector for each \(A_{i}\) denoting odd/even occurrence of such prime factors.

From now on, **whenever I talk about primes it is assumed to be of

the form \(4K+3\) (for some \(k \in Z\) ) and that the reader is familar

with basis of a vector space and gaussian elimination. Please go through

this if you are not familiar with the usage of those concepts in

CP:

Introductory Blog on

using Linear Algebraic techniques to solve XOR related problems

Now, \(A_{i}≤10^7\) so # primes is around \(3∗10^5\). Normal Gaussian elimination would easily TLE, even with bitsets. Still, lets imagine doing Gaussian elimination traversing from the highest bit to the lowest and xoring when necessary. Notice that, when you are traversing, if any prime factor \(>\sqrt{ A_{i} }\) exists (at max one can exist), the moment you xor it, no more bits \(>\sqrt{ A_{i} }\) will be on anymore (since only one such was on earlier and now you have xored it). Now, you can simply simulate Gaussian elimination on lower-order primes (i.e. primes\(≤\sqrt{ A_{i} }\)) and handle that one highest bit (if it exists) separately. This way, combined with bitset for lower-order primes, solves the problem without queries.

For queries, let us process them offline. Let \(queries[l]\) store all the queries with their left endpoint as \(l\). We will insert \(A_{i}\) into our basis from the right side, \(i\) going from \(N−1\) to \(0\). After we have inserted the element at index \(i\), we will answer all the queries which begin their, i.e., the ones in \(queries[i]\).

We will use a simple fact about linear combination of vectors but in a clever manner to answer the queries. Suppose we are about to try and insert v into our \(Basis\) (may not insert if it turns out to be dependant). If \(v=b _{1}+b _{2}+⋯b_{k} (where b_{i}∈Basis)\), then we can remove one of the \(b_i\) and instead insert \(v\) into its place, keeping the basis intact. So, we will use this fact while inserting — we will try to keep such elements in the basis which have leftmost index so as to answer as many queries as possible. We can do this greedily: while inserting \(v\), whenever we are about to xor it with some \(b_i\), we will first check which has the least index and \(swap (b_i, v)\) if \(v\) ‘s index is smaller than \(b_i\). If we do end up swapping, then \(v\) becomes a part of the basis (in place of \(b_i\)), and we progress doing Gaussian Elimination with \(b_i\) instead. Otherwise, we move forward with v like we normally do. Please refer to the attached code for more details.

Now, when we answer queries which have left index \(i\), we will have the basis vectors from \(A[i: N]\) having the least possible index. So for a query \(i\) \(R\), we simply need to count how many vectors in our basis have index \(≤R\). This can be easily done with some BIT or set.

Let \(K\) denote the number of primes \(\leq \sqrt{ A_{i} }\)\((=225)\). We store a bitset of size \(K\) for every prime. While doing Gaussian elimination, we handle the large bit manually while following the normal procedure for lower-order primes; hence it takes \(O (\frac{K^2}{64})\) time to insert a \(A_i\). After that, we answer queries at index i in \(O (log (N))\) time by querying in our BIT. Hence, the overall complexity is \(O \left( N⋅ \frac{k^2}{64}+Q⋅log (N) \right)\), dominated by Gaussian elimination using bitsets:

一个数字可以被表示为两个平方数的和,当且仅当它所有形式为 \(4K+3\) 的质因数出现偶数次。所以,当我们计算几个数字的乘积时,我们只关心它的质因数分解;因此,我们可以为每个 \(A_{i}\) 存储一个位向量,表示这些质因数的奇数/偶数出现。

从现在开始,每当我谈到质数时,假定它们为形式为 \(4K+3\)(对于某个 \(k \in Z\)),并且读者熟悉向量空间的基础和高斯消元。如果您对在竞赛编程中如何应用这些概念不熟悉,请阅读这篇文章:使用线性代数技术解决异或相关问题的入门博客

现在,\(A_{i}≤10^7\),因此质数的数量大约为 \(3∗10^5\)。普通的高斯消元甚至使用位集合也可能很容易超时。不过,让我们假设从最高位到最低位进行高斯消元并在必要时进行异或操作。注意,当您遍历时,如果存在任何大于 \(\sqrt{ A_{i} }\) 的质因数(最多可以存在一个),一旦异或了它,就不会再有大于 \(\sqrt{ A_{i} }\) 的位了(因为之前只有一个位是打开的,现在您已经异或了它)。现在,您可以简单地模拟对低阶质数(即质数 \(≤\sqrt{ A_{i} }\))进行高斯消元,并单独处理那个最高位(如果存在的话)。这样,结合对低阶质数的位集合处理,就可以解决问题。

对于查询,让我们离线处理它们。让 \(queries[l]\) 存储所有左端点为 \(l\) 的查询。我们将从右侧将 \(A_{i}\) 插入到我们的基向量中,其中 \(i\) 从 \(N-1\) 到 \(0\)。在我们插入索引 \(i\) 处的元素之后,我们将回答所有在那里开始的查询,即 \(queries[i]\) 中的查询。

我们将使用一个关于向量的线性组合的简单事实,但以聪明的方式来回答这些查询。假设我们将要尝试插入 \(v\) 到我们的 \(Basis\)(如果结果是依赖的话,可能不会插入)。如果 \(v=b_{1}+b_{2}+\dots+b_{k}\)(其中 \(b_{i}∈Basis\)),那么我们可以删除 \(b_i\) 中的一个,并将 \(v\) 放在它的位置,保持基向量不变。因此,我们在插入时将使用这一事实。我们将尝试保留基向量中左端的索引,以回答尽可能多的查询。我们可以贪婪地实现这一点:在插入 \(v\) 时,每当我们要与一些 \(b_i\) 进行异或操作时,我们将首先检查哪个索引最小,如果 \(v\) 的索引小于 \(b_i\),我们将 \(swap(b_i, v)\)。如果最终进行了交换,那么 \(v\) 将成为基向量的一部分(代替 \(b_i\)),并且我们将使用 \(b_i\) 进行高斯消元。否则,我们将像正常情况一样继续进行。更多细节,请参考附加的代码。

现在,当我们回答左端点为 \(i\) 的查询时,我们将拥有基向量 \(A[i:N]\),其具有可能的最小索引。因此,对于一个查询 \(i\) 到 \(R\),我们只需要计算基向量中索引 \(≤R\) 的数量。这可以很容易地使用一些 BIT 或 set 完成。

让 \(K\) 表示 \(\leq \sqrt{ A_{i} }\) 的质数的数量(\(=225\))。我们为每个质数存储一个大小为 \(K\) 的 [[../ACM/bitset]] 。在进行高斯消元时,我们手动处理大位数,然后按照常规程序处理低阶质数;因此,插入 \(A_i\) 花费 \(O (\frac{K^2}{64})\) 的时间。之后,在 \(O (log (N))\) 的时间内回答 \(O (log (N))\) 中的查询。因此,总体复杂度是 \(O \left( N⋅ \frac{k^2}{64}+Q⋅log (N) \right)\),由使用 [[../ACM/bitset]] 的高斯消元支配。

Reference sol in C++

1 |

|